Understanding the Key Terms in the Immersive Technology Industry: AR, MR, XR, VR, and Spatial Computing

Augmented Reality (AR), Virtual Reality (VR), Mixed Reality (MR), Extended Reality (XR), and Spatial Computing refer to different but overlapping concepts in the immersive tech space. Each term has its own meaning, but the technologies behind them form an interconnected ecosystem that is transforming industries and creating new possibilities for businesses.

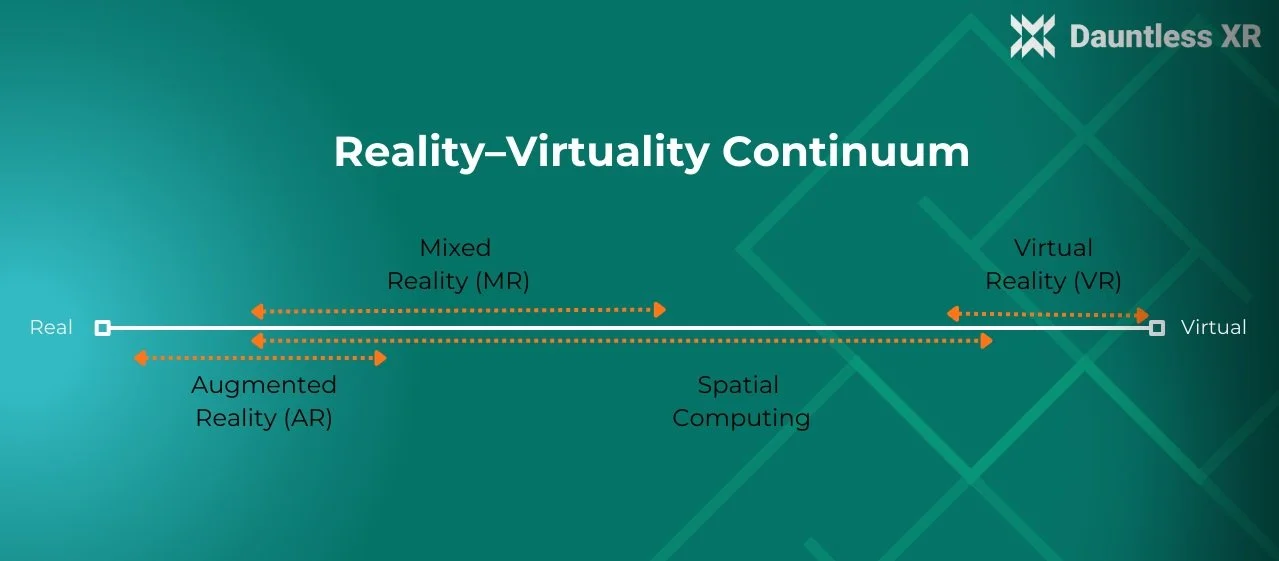

One of the most useful frameworks for understanding the different types of immersive experiences is the Reality–Virtuality Continuum (also known as the Reality–Virtuality Spectrum). Introduced by Paul Milgram and Fumio Kishino in 1994, the continuum arranges environments along a scale that runs from fully physical (real) to entirely simulated (virtual). But what exactly does this continuum represent, and how does it relate to the different immersive experiences in XR?

The Reality–Virtuality Continuum is usually drawn as a spectrum, with the Real Environment at one end, the Virtual Environment at the other, and several mixed forms in between. Its key point is that these experiences are not separate, discrete categories. Instead, they form a gradient along which the line between "real" and "virtual" is fluid.

At the far left of the spectrum lies the Real Environment, which refers to the physical world around us, experienced through our natural senses without any digital augmentation. Think of this as the baseline—the world as it is. In XR, this end represents no digital intervention at all; it's just reality in its purest form.

At the far right of the continuum lies the Virtual Environment, the domain of Virtual Reality (VR), which immerses users in a completely digital world. The goal of VR is a fully immersive experience in which the user’s senses are entirely occupied by the virtual environment. VR is well suited to simulations, gaming, training, and any experience that does not require interaction with the real world.

Let’s break down the common terms: for each one, we’ll give a definition, note where it sits on the Reality–Virtuality Continuum, and explore how it is being used in real-world B2B applications.

Extended Reality (XR)

Definition: Extended Reality (XR) is an umbrella term that encompasses all immersive technologies, including AR, VR, and MR. It describes the entire spectrum of experiences that blend the physical and digital worlds, which makes XR a catch-all for any technology that alters the user's experience of reality, whether through virtual, augmented, or mixed environments. If you are unsure where on the continuum a technology falls, you can label it “XR”.

Example Device: Varjo XR-3

A high-end XR headset that supports both virtual and mixed reality experiences. It’s designed for professional environments like aerospace, automotive, and medical sectors, where users can interact with high-fidelity simulations and real-world objects simultaneously.

B2B Example: Design & Collaboration. In architecture, XR technology from companies like PTC helps teams in different locations collaborate in real time by virtually "placing" designs into the physical world for review and feedback. This streamlines decision-making and improves productivity.

Augmented Reality (AR)

Definition: Augmented Reality (AR) overlays digital information or virtual objects onto the real world in real time. Unlike Virtual Reality (VR), which immerses the user in a completely virtual environment, AR enhances the physical world with interactive, computer-generated elements.

Example Device: Xreal Air 2

Xreal's line of AR glasses is a prime example of augmented reality (AR) technology: it overlays digital content onto the physical world to enhance the user's experience. A lightweight, see-through display lets users view 3D virtual elements while still interacting with their real environment. This blend of digital and physical space makes the glasses well suited to gaming, navigation, and productivity applications.

B2B Example: AURA. Immersive software developer Dauntless XR offers Aura, an AR platform for spatial data visualization. Aura ingests machine-generated data and turns it into a contextually relevant, shared XR experience. In the pictured example, a completed flight is visualized over a map at tabletop scale so that a trainee pilot and their instructor can debrief.

Mixed Reality (MR)

Definition: Mixed Reality (MR) allows digital content not only to overlay the physical world but also to interact with it in real time. In MR, virtual objects are anchored to the real world and can respond to environmental changes or user actions, creating a more seamless integration between the physical and digital realms. The term is also sometimes used as an umbrella term, in the same way as Extended Reality (XR).

Example Device: Microsoft HoloLens 2

Although it is often categorized as AR, the HoloLens 2 is also an example of MR (Microsoft itself markets it as a mixed reality device), because its highly interactive digital objects appear to be integrated with the real-world environment.

B2B Example: Katana XR. Dauntless developed Katana XR, a guided workflow tool with web-based authoring. Text instructions are created in Katana Pro and can then be viewed on XR devices. Enterprise users have access to Katana’s “Field Mode”, in which anchors (such as a QR code affixed to a set location on an airframe) allow the workflow's digital objects to interact with the physical airframe. In the example pictured, the mechanic sees the digital maintenance instructions overlaid on the physical panel of the aircraft where the step must be performed.

Katana XR: digital maintenance instruction overlaid onto aircraft

Spatial Computing

Definition: Spatial Computing refers to the technology and processes that allow computers to understand, interact with, and simulate the physical world in 3D space. It uses sensors, cameras, and processing algorithms to build spatially aware systems that can sense and respond to the physical environment. AR, MR, and Spatial Computing all aim to bridge the gap between the digital and physical worlds, but Spatial Computing takes a more comprehensive approach, integrating the two across various sensory modalities and interaction methods, and is generally considered the broader term.

Example Device: Apple Vision Pro

Using a combination of sensors, cameras, and machine learning, the Vision Pro maps and understands the user's environment in real time, allowing intuitive interaction with virtual elements as if they were part of the physical space. This spatial awareness lets users blend digital tools, media, and applications into their surroundings while controlling how much of those surroundings they see and interact with.

B2B Example: Construction & Real Estate. Trimble uses spatial computing in its construction management software to improve project planning and site management. By integrating spatial data, the software lets teams visualize construction projects in real-world spaces, improving accuracy and coordination.

Virtual Reality (VR)

Definition: Virtual Reality (VR) is a completely immersive experience that shuts out the real world and places the user in a fully digital environment. VR typically requires a headset and sometimes additional peripherals like controllers to interact with the virtual space.

Example Device: Meta Quest 3

A standalone VR headset that offers a fully immersive experience for games, training simulations, and virtual collaboration. It can use passthrough to support mixed reality applications, but it is currently best known as the dominant consumer VR headset.

B2B Example: Training and Simulation. VR is increasingly used in industries like healthcare, aviation, and manufacturing for training. For example, Boeing uses VR to train technicians on complex aircraft assembly without physical prototypes, reducing training costs and improving safety.

Understanding this continuum helps both developers and users see where different XR experiences sit and how they can best be used. These distinctions matter today, but future devices will likely move across the entire continuum to best suit the user's experience, and the labels will matter less. Until then, it is important to be clear about what kind of technology you need when exploring metaverse solutions.